A while back I had a client who requested a data dump of a SQL Server database to a set of CSV files (one for each table). I whipped up a PowerShell script to do it using sqlcmd.exe, and I thought I'd post it here in case someone else found it useful (or I wanted to remember how to do it someday).

(I later found out that they wanted the CSV files for manual one-at-a-time import into MySQL; the whole process could have been accomplished with about ten minutes of work in MySQL Workbench. If you've already got a programmer available, always explain what your end goal is (even if you, yourself, are a programmer); they might know of a better way.)

Here's the script:

$tableQuery = @"

SET NOCOUNT ON

SELECT sobjects.name

FROM sysobjects sobjects

WHERE sobjects.xtype = 'U'

"@

$sqlcmd = "C:\Program Files\Microsoft SQL Server\110\Tools\Binn\sqlcmd.exe"

$server = ".\SQLEXPRESS"

$db = "[dbname]"

$arguments = @("-S", $server, "-d", $db, "-E", "-h", "-1", "-W", "-Q", "`"${tableQuery}`"")

$tables = & $sqlcmd $arguments

$tables | select @{Name="filename";Expression={"$_.csv"}}, @{Name="query";Expression={"SET NOCOUNT ON`nSelect * From $_"}} | % {

$arguments = @("-S", $server, "-d", $db, "-E", "-s", "`",`"", "-W", "-Q", "`"$($_.query)`"")

(& $sqlcmd $arguments) > $_.filename

}

First we set up the query to list all the tables in the database. Filtering by xtype of 'U' gives us the just the user tables in the database. If we wanted, we could also do further filtering on; for example, if we didn't want to include the ASP.NET Identity tables, we could add something like And sobjects.name not like '%asp%'.

The next few lines are just the location of sqlcmd.exe, and the database server and name to pass to all the commands. I'm running all this stuff as a trusted user (the -E in the sqlcmd options), so I don't have to worry about setting up the username and password.

Now comes the the hard part; passing arguments from PowerShell to older DOS command-line utilities can be... challenging to get right. Here, I'm building an array of all the arguments; when I pass it as an argument to & $sglcmd, PowerShell automatically expands it and passes the arguments correctly. It's possible there's a simpler way to do it, but this works well enough. The arguments of interest:

-h -1 tells sqlcmd not to print the headers at all. I just need the list of table names; I don't need headers.-W removes trailing whitespace-Q will run the query and then exit sqlcmd. As opposed to -q, which runs the query and keeps sqlcmd open.

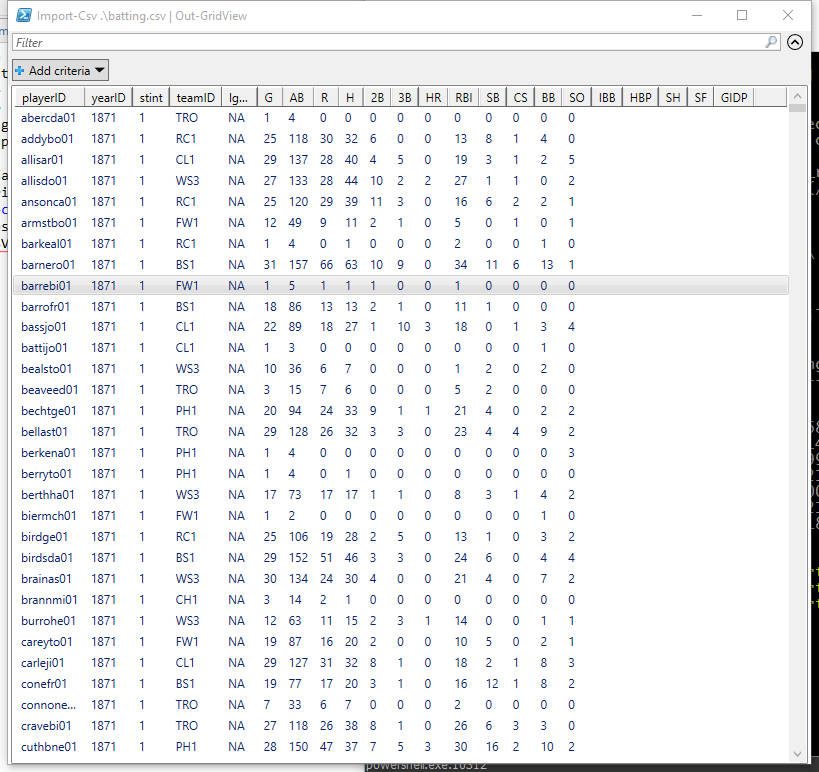

We retrieve the list of tables and pipe it into a Select-Object command which uses the table name to generate a .csv file name and a Select * From query. Those properties are piped into a For-Each which executes each SELECT statement and dumps the results into the CSV file. This time around, we change a couple of the sqlcmd options:

-s "," tells sqlcmd to use a comma as the column separator- We drop the

-h -1 because we want headers on the tables; the sqlcmd default is to print them once for each query, which is what we want in this case

Happy data wrangling!